App Rationalization

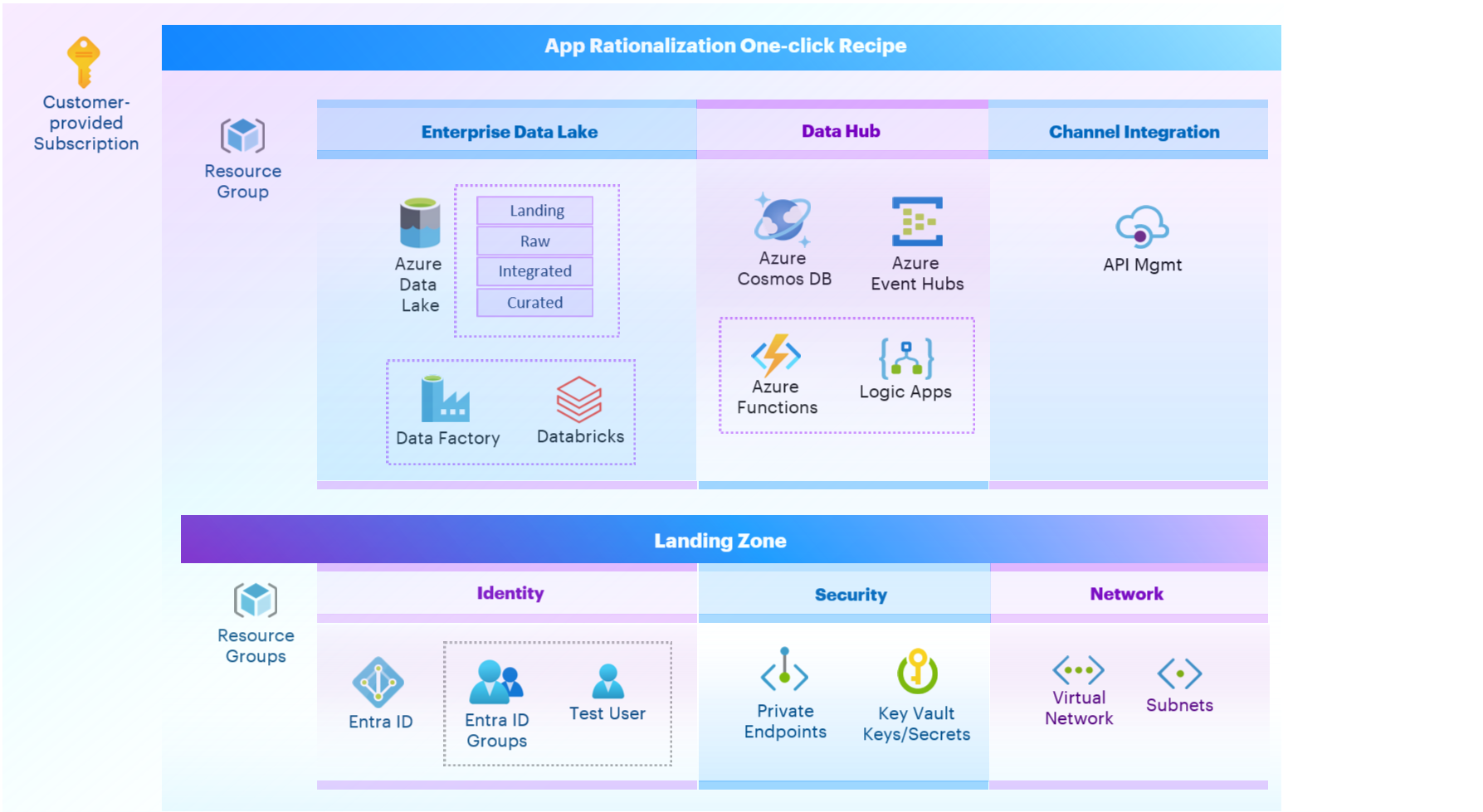

This recipe provisions an Enterprise Data Lake, a Data Hub, and Channel Integration. You can deploy it directly through the console, or download it as Terraform or export it to GitHub to deploy later.

Estimated deployment time: 30 minutes

Components

Applying the App Rationalization one-click recipe deploys the following components inside the customer-provided subscription.

- Resource group with App Rationalization components:
  - Enterprise Data Lake:
    - ADLS Gen2 with containers for data storage
    - Azure Data Factory for orchestration
    - Azure Databricks for data transformation
  - Data Hub:
    - Azure Cosmos DB as real-time database
    - Azure Event Hubs for event detection
    - Azure Functions and Logic Apps for message processing
  - Channel Integration:
    - Azure API Management
- Landing Zone components and settings:
  - Entra ID groups and test user
  - Security components:
    - Private endpoints for corresponding services
    - KeyVault keys and secrets
- Network Landing Zone components:
  - Virtual Network with Subnets
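
One way to confirm after deployment that the components listed above exist is to compare the resource types found in the resource group against an expected set. The sketch below is illustrative only. The mapping from components to Azure resource types covers a subset of the list and is an assumption about how the recipe provisions them, and `missing_components` is a hypothetical helper, not part of the recipe. The input list could come from, for example, `az resource list --resource-group <name> --query "[].type" -o json`.

```python
# Assumed mapping of recipe components to Azure resource types.
# The recipe's actual templates may provision different or additional types.
EXPECTED_RESOURCE_TYPES = {
    "ADLS Gen2": "Microsoft.Storage/storageAccounts",
    "Azure Data Factory": "Microsoft.DataFactory/factories",
    "Azure Databricks": "Microsoft.Databricks/workspaces",
    "Azure Cosmos DB": "Microsoft.DocumentDB/databaseAccounts",
    "Azure Event Hubs": "Microsoft.EventHub/namespaces",
    "Azure API Management": "Microsoft.ApiManagement/service",
    "Key Vault": "Microsoft.KeyVault/vaults",
    "Virtual Network": "Microsoft.Network/virtualNetworks",
}


def missing_components(deployed_types):
    """Return the expected components whose resource type was not found.

    Resource type names are compared case-insensitively.
    """
    deployed = {t.lower() for t in deployed_types}
    return sorted(
        name
        for name, resource_type in EXPECTED_RESOURCE_TYPES.items()
        if resource_type.lower() not in deployed
    )
```

An empty result means every component in the assumed mapping has at least one matching resource. It does not check configuration, private endpoints, or Entra ID objects.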

Post-deployment Steps

1. Create Databricks notebooks to load data from sources and process it.

2. Create Azure Data Factory pipelines to orchestrate execution of the Databricks notebooks.

3. Create Logic Apps and/or Azure Function Apps to insert messages into Cosmos DB.

4. Create Logic Apps and/or Azure Function Apps to expose information from Cosmos DB to external applications.

5. Use Azure API Management to expose APIs for partner or enterprise systems.
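
As an illustration of step 3, the message-processing logic inside a Function App could look roughly like the sketch below. It is a minimal example under stated assumptions, not the recipe's implementation: the `accountId` partition key field, the helper names `to_cosmos_item` and `process_messages`, and the message shape are all hypothetical. The database write is passed in as a callable so the logic can be exercised without an Azure connection.

```python
import json
import uuid


def to_cosmos_item(message_body, partition_key_field="accountId"):
    """Convert a raw JSON message into a dict suitable for Cosmos DB.

    `partition_key_field` is a hypothetical field name; use whatever
    partition key the target container was created with.
    """
    payload = json.loads(message_body)
    if not isinstance(payload, dict):
        raise ValueError("message must be a JSON object")
    if partition_key_field not in payload:
        raise ValueError(f"message is missing '{partition_key_field}'")
    item = dict(payload)
    # Cosmos DB items need a string `id`; generate one if the sender omitted it.
    item.setdefault("id", str(uuid.uuid4()))
    return item


def process_messages(messages, upsert):
    """Upsert each valid message and return (stored, rejected) counts."""
    stored = rejected = 0
    for body in messages:
        try:
            upsert(to_cosmos_item(body))
            stored += 1
        except ValueError:  # also covers json.JSONDecodeError
            rejected += 1
    return stored, rejected
```

In a deployed function, `upsert` could be the `upsert_item` method of a container client from the `azure-cosmos` package. In a local test it can be as simple as `some_list.append`.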

Reference Architecture

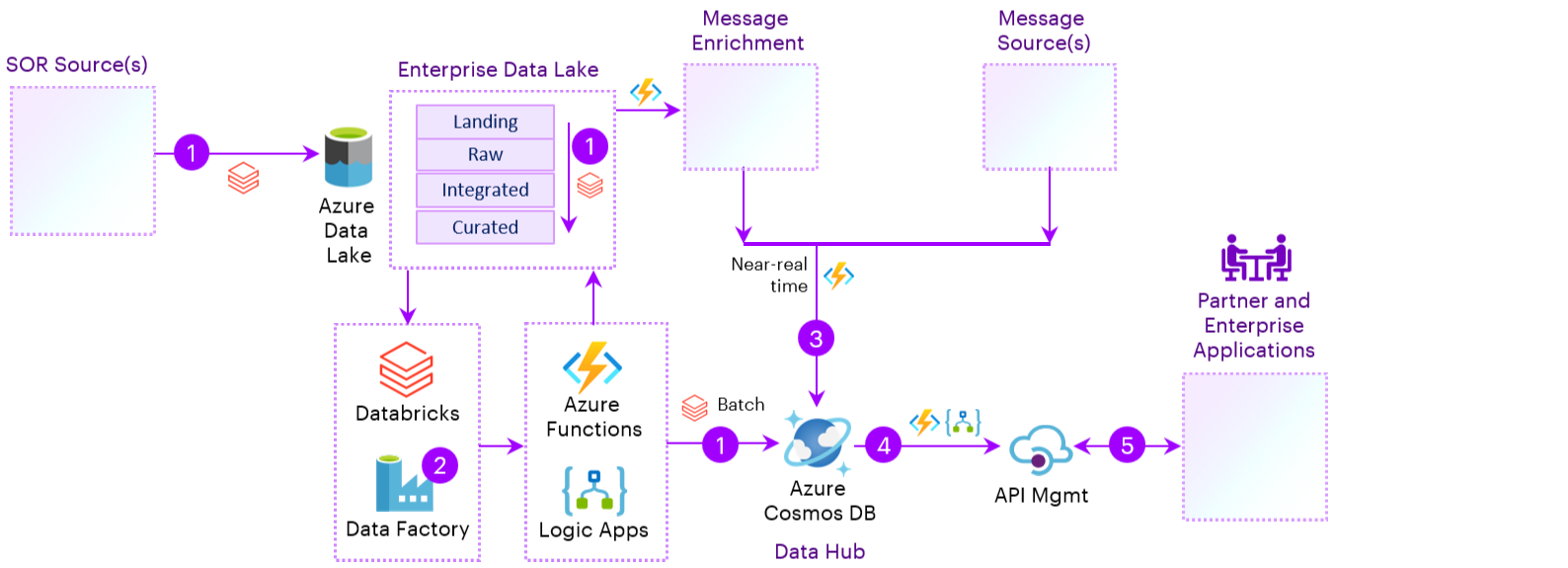

Applying the App Rationalization one-click recipe creates components for near-real-time (NRT) integration with partner and enterprise applications: a Data Hub as the NRT database, a Data Lake for batch processing, and components for API deployment and management. The recipe is ready for channel partner consumption (for example, Open Banking).

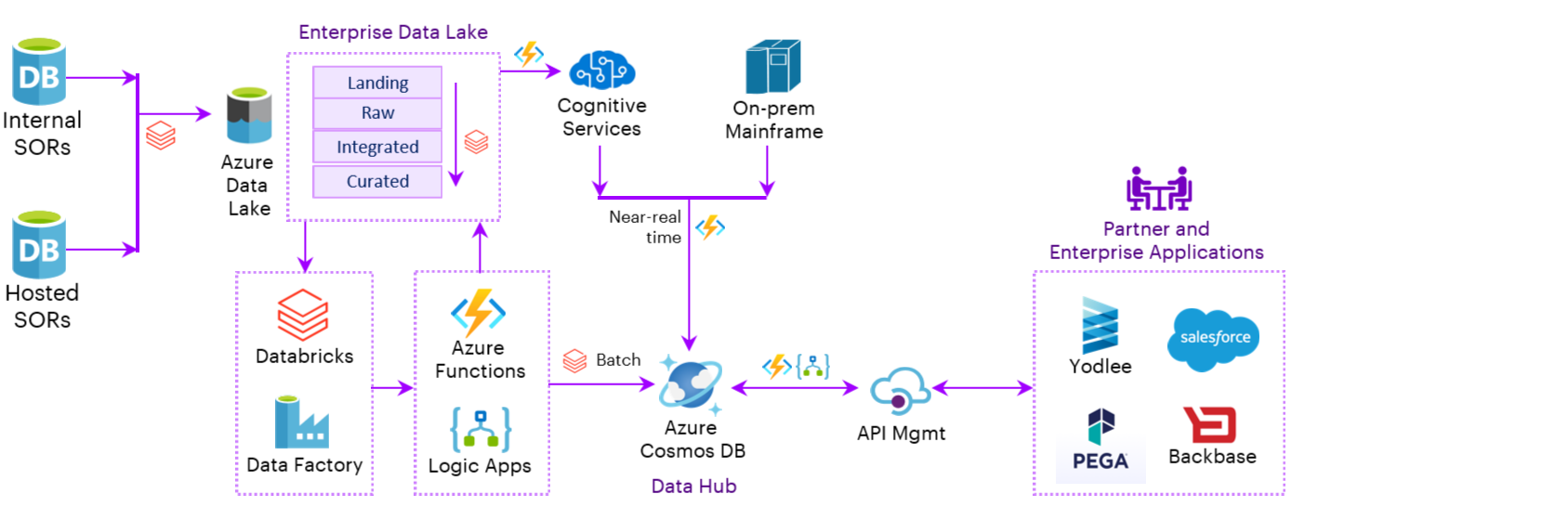

1. Systems of record (SORs) are the systems where data is first generated. Data from SORs on different platforms is collected and given as input to the Enterprise Data Lake (EDL) platform.

2. In the EDL, PI handling, reduction, syncing, and batching are applied to the data. The data is then segregated and stored on a daily and monthly basis.

3. In the Data Hub, a copy of the Raw container is passed to Cognitive Services, which stores the data as archive data.

4. Blend is a platform that generates modular components and automated workflows for banks. Data from the Blend platform, along with a large collection of mainframe files, is also given as input to the Data Hub Cosmos DB.

5. In Channel Integration, Azure Logic Apps connects legacy, modern, and cutting-edge systems across cloud, on-premises, and hybrid environments, and provides low-code/no-code tools for building highly scalable integration solutions for enterprise and business-to-business (B2B) scenarios.

6. Partner and enterprise applications, for example:

- Backbase provides solutions for retail banking, business banking and wealth management.

- Pega is a low-code platform for workflow automation and AI-powered decisioning.

- Yodlee is a platform that provides account aggregation – consolidating information from multiple accounts, e.g. credit card, bank, investment, email, travel rewards, etc., on one screen.

- Salesforce provides data on sales, customer service, marketing automation, e-commerce, analytics, and application development.

7. Data from all these platforms is collected, stored, and processed in Channel Integration, and later used in Power BI for analytics and reporting.
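
The EDL processing in step 2 (PI handling, then daily and monthly storage) can be sketched as follows. This is a hedged illustration, not the recipe's actual notebook code: the PI field names, the choice of SHA-256 hashing as the PI treatment, and the folder layout are all assumptions, and `mask_pi` and `storage_paths` are hypothetical helpers.

```python
import hashlib
from datetime import date

# Hypothetical set of fields treated as personal information (PI).
PI_FIELDS = frozenset({"name", "email"})


def mask_pi(record, pi_fields=PI_FIELDS):
    """Return a copy of `record` with PI values replaced by SHA-256 digests.

    Hashing is only one possible treatment; unsalted hashes of low-entropy
    values can be reversed by guessing, so a real pipeline may need more.
    """
    masked = dict(record)
    for field in pi_fields:
        if field in masked:
            value = str(masked[field]).encode("utf-8")
            masked[field] = hashlib.sha256(value).hexdigest()
    return masked


def storage_paths(source, day):
    """Return (daily, monthly) folder paths for a source system and date.

    The layout is an assumed convention for the ADLS Gen2 containers.
    """
    daily = f"{source}/daily/{day:%Y/%m/%d}"
    monthly = f"{source}/monthly/{day:%Y/%m}"
    return daily, monthly
```

For example, `storage_paths("core-banking", date(2024, 3, 5))` returns `("core-banking/daily/2024/03/05", "core-banking/monthly/2024/03")`, where `core-banking` is a made-up source name.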